Recognize the limitations, then plan for redundancy.

The network edge is continually expanding into new applications and new industries, and network-enabled sensors are leading the way. Metcalfe’s Law holds that the value of a network grows roughly with the square of the number of connected devices, so every device you add, whatever its function may be, increases the value of the network.

Sensors demonstrate that point every day. Whether they’re measuring deformation errors in a tire plant, geo-fencing an oil pipeline, or monitoring well water quality for the U.S. Geological Survey, sensors lower costs and enhance productivity.

Each new application presents its own set of new problems. Sensors must function in a wide array of environments, and they must be able to report their data. This article will describe system design techniques that anticipate issues like brownouts and equipment failure, and prepare for them by employing solutions like local decision-making and controls, and redundant backhaul paths.

The myth of 100% uptime

All data communications installations have vulnerabilities. Fiber optic cable, for example, the option with by far the greatest range and bandwidth, is used by the telecommunications companies to move data across entire continents.

Uptime is excellent, but the system isn’t perfect. The cables are either run through sewers, where backhoes break them with annoying regularity, or they’re strung along telephone poles, where they’re knocked down by everything from windstorms to sleepy truck drivers. Indoor fiber optic connections have problems of their own. Transmitters and receivers eventually fail. Cables can be bent or broken by anything from careless forklift drivers to the cousins of the raccoons that get into power substations and shut down the grid.

Copper cable has weaknesses of its own. Any strong, changing magnetic field can induce current on a copper cable, leading to power surges that can burn out sensors, integrated circuits, and connectors. Industrial machinery isn’t the only thing that can generate those fields. For example, a 1989 solar storm famously produced a geomagnetic disturbance that took out the power grid for all of Quebec. Lightning strikes will also produce damaging electrical events, as will the ground loops that occur when connected devices have different ground potentials.

Wireless connections are subject to failure, too. Interference from other devices on the same frequencies can lead to data loss. And even in free space, radio signal strength falls off with the square of the distance, so merely doubling the range requires a fourfold increase in transmit power.
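The range penalty is easy to put numbers on. The short Python sketch below assumes the idealized inverse-square (free-space) model only; real links also lose signal to obstructions, antenna losses, and interference, so treat the results as a lower bound. The function names are illustrative.

```python
import math


def power_scale_for_range(range_factor: float) -> float:
    """Factor by which transmit power must grow to keep the same received
    power when the distance is multiplied by range_factor.

    Assumes an idealized inverse-square (free-space) model.
    """
    if range_factor <= 0:
        raise ValueError("range_factor must be positive")
    return range_factor ** 2


def extra_path_loss_db(range_factor: float) -> float:
    """The same penalty expressed in decibels: 10 * log10(power ratio)."""
    return 10 * math.log10(power_scale_for_range(range_factor))


if __name__ == "__main__":
    for factor in (2, 3, 10):
        print(
            f"{factor}x range: {power_scale_for_range(factor):.0f}x power, "
            f"{extra_path_loss_db(factor):.1f} dB"
        )
```

Under this model a tenfold increase in range costs a hundredfold increase in power, which is why battery-powered sensors rarely solve a range problem with transmit power alone.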

No system is perfect. And the harder you try to achieve perfection, the faster your costs will rise. It’s cheaper and easier to eliminate the need for 100% uptime from the very beginning.

Rugged devices and isolation

While 100% uptime is a mirage, at least for a price that any reasonable person would be willing to pay, that doesn’t mean you shouldn’t try to do your best. Industry-hardened network devices that will stand up to off-the-desktop, real-world conditions can minimize many potential problems.

Their copper connecting cables can be equipped with isolators. Unlike surge suppressors, which only try to limit spikes between the signal and ground line, isolators allow the lines to float while keeping the local side at the proper ground and signal level. USB connections, which are becoming ubiquitous because of their usefulness, also have an unfortunate proclivity for permitting ground loops. They should be isolated as a matter of course.

Planning for downtime

Occasional glitches and communications failures are a fact of life. After you’ve done your best to eliminate the potential problems caused by equipment failure, human error, solar flares, and pesky raccoons, it’s time to start planning for what will happen when communications fail anyway.

You can solve many problems by placing enhanced intelligence and local decision making at the end point, rather than calling for unbroken connectivity with the central controller. Autonomy at the sensor’s end is a great way to get around connection glitches and variations in bandwidth. Monitoring can be done in a “store and forward” scheme where the local sensor has the ability to store data and send/resend it as needed. That is becoming easier and easier to implement. Processors keep growing more powerful, yet their power requirements keep dropping. Sensors can now be equipped with powerful internal processors as well as significant quantities of internal memory. That’s a great substitute for perfect uptime.

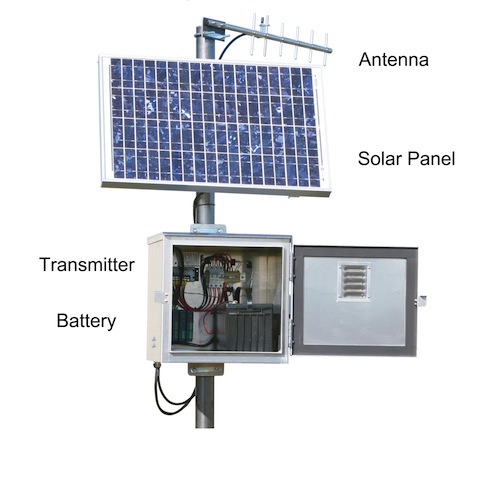

One good example would be the U.S. Geological Survey mentioned earlier. The USGS monitors well and surface water conditions at sites all over the country and uses the data to make ongoing updates to its website. As you might expect, many of its sensors are deployed in very remote locations, and in places where there is no access to the power grid and no Internet infrastructure. Data communications are handled by low-power radio transmitters. Because the transmitters have low power but must broadcast across large distances and overcome interference, there will be ongoing fluctuations in bandwidth and link quality.

Trying to achieve 100% uptime in such circumstances would be absurd. Instead, the sensors use localized intelligence to record and store data, and they send/resend until they are satisfied that the information has been passed along.
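A store-and-forward scheme of this kind can be sketched in a few lines of Python. This is a minimal illustration, not the USGS implementation. The `send` callback is a hypothetical stand-in for whatever radio or network transport the sensor uses, and it is assumed to return True only when the far end has acknowledged the reading.

```python
from collections import deque
from typing import Callable, Deque, Tuple

Reading = Tuple[float, float]  # (timestamp, value)


class StoreAndForward:
    """Buffer readings locally and forward them oldest-first.

    `send` is a hypothetical transport callback: it returns True only when
    the far end has acknowledged the reading.
    """

    def __init__(self, send: Callable[[Reading], bool], capacity: int = 10_000):
        self._send = send
        # Bounded buffer: when full, the oldest reading is dropped first.
        self._buffer: Deque[Reading] = deque(maxlen=capacity)

    def record(self, timestamp: float, value: float) -> None:
        """Always store locally; never depend on the link being up."""
        self._buffer.append((timestamp, value))

    def flush(self) -> int:
        """Try to forward buffered readings; stop at the first failure.

        Returns the number of readings acknowledged on this attempt.
        """
        sent = 0
        while self._buffer:
            reading = self._buffer[0]
            try:
                acknowledged = self._send(reading)
            except OSError:
                acknowledged = False  # treat a transport error as "not sent"
            if not acknowledged:
                break  # keep the reading and retry on the next flush
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        """Number of readings still waiting to be forwarded."""
        return len(self._buffer)
```

The sensor calls `record` on every measurement and `flush` whenever it has a transmit opportunity. A reading leaves the buffer only after it has been acknowledged, so a dropped link delays data rather than losing it, up to the buffer capacity.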

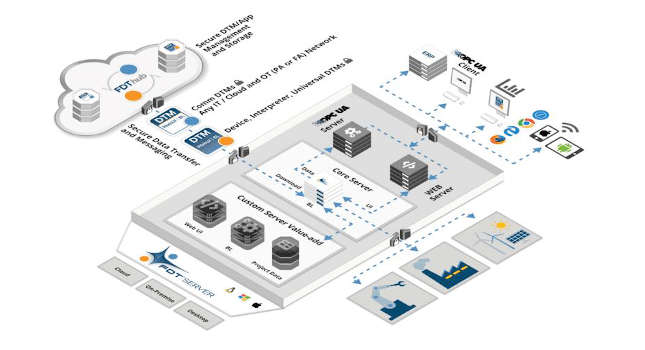

Redundant backhaul

There are many ways to connect to a network. Cable of one kind or another, with its built-in security, bandwidth, and reliability, normally forms the basic infrastructure of an industrial LAN. But what happens if you’d like to establish redundant backhaul and nudge your uptime rate that much closer to 100%? Cable installations involve a lot of labor and materials; they aren’t cheap. If the main infrastructure is already wired, you might consider using wireless as the backup. (It’s what the telecoms did while they were rebuilding their fiber optic networks in the aftermath of Hurricane Katrina.)
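In software, a wired primary with a wireless backup often comes down to trying the paths in priority order. The Python sketch below shows the idea under simple assumptions: each link is represented by a hypothetical `send` callback that returns True on delivery, and a transport error is treated as a failed path. The link names are illustrative.

```python
from typing import Callable, Optional, Sequence, Tuple

# A link is a (name, send) pair. `send` is a hypothetical callback that
# returns True when the payload was delivered over that path.
Link = Tuple[str, Callable[[bytes], bool]]


def send_with_failover(payload: bytes, links: Sequence[Link]) -> Optional[str]:
    """Try each link in priority order; return the name of the one that worked.

    Returns None when every path failed, so the caller can keep the payload
    buffered and try again later.
    """
    for name, send in links:
        try:
            if send(payload):
                return name
        except OSError:
            pass  # a transport error counts as a failed path; try the next
    return None
```

Called with a list such as `[("wired", send_wired), ("wifi", send_wifi)]`, the function uses the cable whenever it is available and falls back to the wireless path only when the cable fails.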

There are a number of ways to go about it, depending on what you need to accomplish. With advances in multiple-input, multiple-output (MIMO) technology and associated developments, Wi-Fi now has very serviceable range and bandwidth capabilities. It uses license-free frequencies, which presents a financial advantage, and it is inherently interoperable with off-the-shelf wireless network adapters, laptops, tablets, and even smartphones. For many applications Wi-Fi would be a very cost-effective and uncomplicated way to establish redundant backhaul.

Wi-Fi does have range limitations. You’ll need another wireless option if you need to establish machine-to-machine (M2M) communication over long distances and the connections must be under your own control. Companies like Conel, in the Czech Republic, specialize in enabling users to maintain data communications over the cellular telephone network. The packet transfer system lets users employ any station in the network as an end station or as a relay station, allowing for networks with complex topology and vast size. Data transfer can be encrypted, and the system will support your choice of protocols.

The technology is mature enough that 3G often serves as the backhaul for telecommunications and broadband services in countries like Belize, where a fiber build-out in difficult terrain like jungles and mountains would be far too expensive.

The goal of 100% connectivity remains elusive. But industrial-grade installations, alternative power, and redundant backhaul combined with smart sensors can get you very, very close.

Mike Fahrion, the director of product management at B&B Electronics, is an expert in data communications with 20 years of design and application experience.