Case examples use search as a starting point for identifying sample sets.

We often forget how difficult it was to navigate the Web in the early digital age. Before the introduction of directories and search engines such as AltaVista, Yahoo, and Google, finding the information you needed among the vast amount of data stored on the Web was time-consuming and frustrating.

The same applies to companies looking to leverage the data collected by their various computer systems. Companies are good at amassing data but have lacked affordable, effective tools for finding actionable information in it.

Data historians, for example, were introduced in the 1980s to store process data and generate reports, but they were never designed to be mined easily or to have their data visualized for predictive analytics.

The Industrial Internet of Things (IIoT) is an opportunity to improve business intelligence and boost efficiencies using data-driven analytics. IIoT introduces new types of data and formats, increases data volume, and transforms operational decision making from reactive to proactive. However, traditional architectures and processes may not deliver the desired results.

It’s not about “seeing everything” anymore. Enormous amounts of data quickly overwhelm any system that lacks an easy way to locate specific information. Recognizing this, early attempts to turn raw data into actionable information relied on data modeling. The problem is that modeling usually requires complex IT projects staffed with data scientists, and such projects can be time-consuming and expensive.

The Google for Industry concept was conceived in 2008 by former process engineers from Covestro, formerly Bayer MaterialScience. The approach they developed, grounded in process-industry experience, provides easy access to historian information.

These engineers saw that analytics models were hard to scale up beyond pilot projects. Like many others, they wanted industrial search to work the way Google does in our daily lives.

Conceiving Google for Industry

Data modeling requires experts and is time-consuming. Moreover, the resulting models are inflexible and sensitive to change. Data modeling involves the following steps:

- Preparation

- Modeling

- Validation

- Going live

Each time a model is changed, the cycle is repeated. Data modeling is not only time-consuming but also poorly suited to dynamic processes, because it often relies on assumptions about stationarity and data distribution that do not hold in a real process whose variables change over time.

In an early project, a chemical plant manager struggled with significant performance variance among operator teams, which directly affected throughput. He turned to the data to learn what the best-performing shift team was doing differently. Laborious searches through the process-data history were needed to determine which “manual actions,” taken in the right circumstances, led to better or worse plant performance.

The limitations of this approach prompted the creation of a solution that replaced labor-intensive data modeling with pattern recognition.

Ever wondered how Shazam, an application that identifies songs from a short sample, works? Shazam looks for patterns in the sample that match patterns in its database. More interestingly, it ignores most of the song’s millions of “data points” and focuses on a few intense moments, or “high-energy content.” This approach might seem unreliable, but Shazam is accurate even in environments with a lot of background noise.

Shazam succeeds because it searches for and recognizes distinctive patterns, rather than trying to match every aspect of a song, and it does so quickly. In other words, the key to big-data analytics is to focus on patterns rather than try to crunch all the data.
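
The idea of keeping only a few distinctive moments can be illustrated with a toy sketch. This is not Shazam’s actual algorithm (which works on audio spectrograms); it simply keeps local peaks above an assumed threshold and compares the spacing between them, so low-level noise is ignored. All function names are hypothetical.

```python
def peak_fingerprint(signal, threshold):
    """Return indices of local maxima above threshold; all other samples are ignored."""
    return [
        i
        for i in range(1, len(signal) - 1)
        if signal[i] > threshold and signal[i] >= signal[i - 1] and signal[i] > signal[i + 1]
    ]


def peak_intervals(peaks):
    """Gaps between consecutive peaks, independent of where the sample starts."""
    return [b - a for a, b in zip(peaks, peaks[1:])]


def fingerprints_match(sample, reference, threshold):
    """True if the sample's peak spacing appears as a contiguous run in the reference."""
    s = peak_intervals(peak_fingerprint(sample, threshold))
    r = peak_intervals(peak_fingerprint(reference, threshold))
    if not s:
        return False
    return any(r[i:i + len(s)] == s for i in range(len(r) - len(s) + 1))


reference = [0, 5, 0, 0, 9, 0, 0, 0, 7, 0, 0, 6, 0]
sample = [1, 9, 1, 0, 1, 7, 0, 1, 6, 1]  # a noisy excerpt of the reference's later peaks
print(fingerprints_match(sample, reference, threshold=4))  # True
```

Only four of the reference’s thirteen samples take part in the comparison, which is the point of the analogy.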

Like Shazam, but in a more complex environment, Google for Industry allows sophisticated searches of process data, in which advanced pattern recognition algorithms find either similar or deviating behavior. Users choose a reference period for one or more tags, and the system finds similar behavior throughout the entire data history.
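
The product’s pattern recognition algorithms are patent-pending and not described here. As a stand-in, a common textbook technique for this kind of query is a sliding-window search with z-normalized Euclidean distance: slide the reference period over the history and rank windows by how closely their shape matches, regardless of offset or scale. The function names below are hypothetical.

```python
import math


def znorm(xs):
    """Z-normalize a window so offset and scale do not affect the match."""
    mean = sum(xs) / len(xs)
    std = math.sqrt(sum((x - mean) ** 2 for x in xs) / len(xs))
    if std == 0:
        return [0.0] * len(xs)
    return [(x - mean) / std for x in xs]


def similarity_search(history, reference, top_k=3):
    """Return up to top_k (start_index, distance) pairs, best match first.

    Overlapping windows are skipped so that each hit is a distinct episode.
    """
    m = len(reference)
    ref = znorm(reference)
    scored = []
    for start in range(len(history) - m + 1):
        window = znorm(history[start:start + m])
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(window, ref)))
        scored.append((dist, start))
    scored.sort()
    hits = []
    for dist, start in scored:
        if all(abs(start - s) >= m for s, _ in hits):
            hits.append((start, dist))
        if len(hits) == top_k:
            break
    return hits


history = [0, 0, 1, 3, 1, 0, 0, 0, 10, 30, 10, 0, 0]
# The windows starting at 2 and 8 have the same shape as [1, 3, 1], so both score ~0.
print(similarity_search(history, [1, 3, 1], top_k=2))
```

Z-normalization is the design choice that lets a small bump and a large bump with the same shape count as “similar behavior.”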

For example, a chemical plant suffered intermittent quality problems caused by contaminated product. A predictive analytics search revealed that a peak in flow rate preceded a temperature peak. The temperature peaks stressed the heat exchanger seals. If those seals break, sterilized and unsterilized product leak into each other, which causes quality problems.

The causal relationship between flow rate and temperature was tested and confirmed with a similarity search. In addition, a monitoring pattern was configured to alert the team whenever similar patterns occurred, prompting the controls to be retuned to reduce seal degradation.
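
A quick complementary check that a flow peak tends to lead a temperature peak is lagged correlation. This is a swapped-in technique, not the similarity search the article describes, and correlation alone does not prove causation. The sketch assumes both tags are sampled on the same time base; the function names and data are hypothetical.

```python
import statistics


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / (sxx * syy) ** 0.5


def best_lag(leader, follower, max_lag):
    """Return (lag, r): the lag at which leader[t] best correlates with follower[t + lag]."""
    best = (0, -2.0)
    for lag in range(max_lag + 1):
        r = pearson(leader[: len(leader) - lag], follower[lag:])
        if r > best[1]:
            best = (lag, r)
    return best


flow = [0, 0, 5, 0, 0, 0, 0, 4, 0, 0, 0, 0, 6, 0, 0, 0]
temp = [0, 0, 0, 0, 5, 0, 0, 0, 0, 4, 0, 0, 0, 0, 6, 0]  # same peaks, two samples later
print(best_lag(flow, temp, max_lag=5))  # lag 2, with r close to 1
```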

Examples of viable patterns

The Google for Industry concept grew quickly, and users soon wanted search capabilities beyond finding similar past patterns. Search was therefore extended to all relevant parameters: behavior patterns, slopes, operator actions, switch patterns, conditional or Boolean expressions based on tags, drift, oscillations of a certain frequency, anomalies, event frames, context, and more.
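
Two of these search types, Boolean conditions on tags and slope (drift), can be sketched in a few lines. The tag names, the dictionary-of-lists data layout, and the function names are assumptions for illustration only.

```python
def find_periods(tags, condition):
    """Return (start, end) index ranges, end exclusive, where condition holds.

    tags: dict mapping tag name -> list of samples (all the same length).
    condition: function taking one time step's {tag: value} dict and returning bool.
    """
    n = len(next(iter(tags.values())))
    periods, start = [], None
    for i in range(n):
        row = {name: values[i] for name, values in tags.items()}
        if condition(row):
            if start is None:
                start = i
        elif start is not None:
            periods.append((start, i))
            start = None
    if start is not None:
        periods.append((start, n))
    return periods


def slope(values):
    """Least-squares slope per sample; a persistent nonzero slope suggests drift."""
    n = len(values)
    mean_t = (n - 1) / 2
    mean_v = sum(values) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in enumerate(values))
    den = sum((t - mean_t) ** 2 for t in range(n))
    return num / den


tags = {"pump_on": [0, 1, 1, 1, 0, 1, 1], "temp": [50, 70, 85, 90, 60, 88, 95]}
print(find_periods(tags, lambda r: r["pump_on"] == 1 and r["temp"] > 80))  # [(2, 4), (5, 7)]
print(slope([1, 3, 5, 7]))  # 2.0
```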

In the case example under discussion, then, Google for Industry started with search as a first step. Further analysis led process experts to a hypothesis about a causal relationship that explained the quality issues with the heat exchanger seals.

Because of fouling, a batch may take longer than the normal 12 to 13 hours. In the past, operators switched to a spare heat exchanger after two to three days, but the spare could foul as well. As a result, it could take two weeks or longer to clear a fouling problem.

With predictive analytics, monitoring alerts arrive two to three weeks in advance and are confirmed by the operator. Because the problem is detected early, appropriate action can be taken, avoiding about 10 hours of production loss every two to three months as well as extended turnaround time. Heat exchanger fouling also caused longer cooling times, so contextualized cooling-time patterns were monitored to generate maintenance alerts.
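
One simple way to turn cooling times into a maintenance alert is to measure the cooling time of each batch and flag when a rolling average rises well above a baseline. The sketch assumes aligned, per-batch temperature lists; the 20% tolerance, three-batch window, and function names are hypothetical, not settings from the case example.

```python
def cooling_time(temps, target):
    """Samples until a batch's temperature falls to or below target (None if never)."""
    for i, t in enumerate(temps):
        if t <= target:
            return i
    return None


def fouling_alerts(batches, target, baseline, tolerance=1.2, window=3):
    """Indices of batches where the recent average cooling time exceeds
    baseline * tolerance, used here as an assumed proxy for fouling."""
    times = [cooling_time(b, target) for b in batches]
    alerts = []
    for i in range(window - 1, len(times)):
        recent = times[i - window + 1 : i + 1]
        if None in recent:
            alerts.append(i)  # a batch never reached target: treat as an alert
        elif sum(recent) / window > baseline * tolerance:
            alerts.append(i)
    return alerts


# Synthetic batches whose cooling times creep up: 4, 4, 4, 5, 6, 7 samples.
batches = [[80] * k + [40] for k in [4, 4, 4, 5, 6, 7]]
print(fouling_alerts(batches, target=40, baseline=4))  # [4, 5]
```

Averaging over a few batches trades a little detection delay for fewer alerts triggered by a single slow batch.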

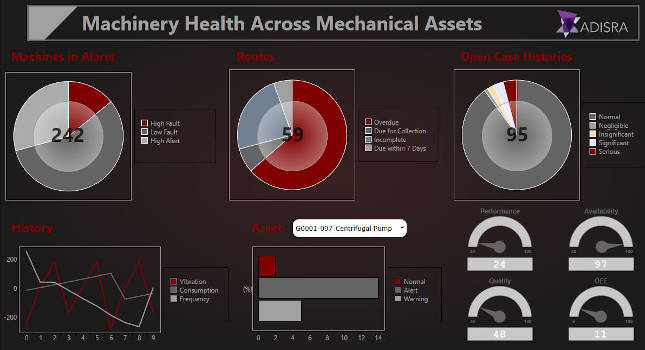

The software application was developed using a high-performance discovery analytics engine for process measurement data, bringing the power and ease of Internet search engines to industrial applications. Working in an intuitive Web-based trend view, users search for trends with patent-pending pattern recognition and machine learning technologies.

Value searches and digital-step searches, used for filtering or for finding similar-looking behavior, are just the start. Process engineers can also search for particular operating regimes, process drifts, operator actions, process instabilities, or oscillations.
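
A digital-step search, or a search for operator actions on a setpoint tag, can be approximated by looking for sample-to-sample jumps of at least a given size. This is a minimal sketch; the function name and step sizes are hypothetical.

```python
def find_steps(values, min_step):
    """Return (index, delta) for every sample-to-sample jump of at least min_step.

    On a setpoint tag each hit is a candidate operator action; on a digital
    (on/off) tag with min_step=1 each hit is a switch event.
    """
    return [
        (i, values[i] - values[i - 1])
        for i in range(1, len(values))
        if abs(values[i] - values[i - 1]) >= min_step
    ]


setpoint = [100, 100, 100, 105, 105, 105, 102, 102]
print(find_steps(setpoint, min_step=2))  # [(3, 5), (6, -3)]
```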

Root cause analysis is important for keeping operations running as efficiently as possible. The causal and influencing factors that search algorithms bring to light show users the underlying reasons for process anomalies or deviating batches.

Comparing anomalous behavior with normal operating periods can be a painful process. The software instead uses advanced search and diagnostic capabilities that compare large numbers of transitions, focusing on both similarities and differences. The result is fast, accurate analysis of a continuous production process.

Live displays let users watch process values evolve, while the application predicts the most probable future evolution based on matching historical values. In this real-world example, impurity concentration can be predicted. Typically, lab analysis quantifies the resulting quality before the product is released to an internal customer.
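
Predicting future evolution from matching historical values can be sketched as nearest-neighbor “analog” forecasting: find the historical windows closest to the most recent samples and average what followed them. This is an assumed, simplified technique (raw Euclidean distance, overlapping matches allowed), not the product’s method; the function name is hypothetical.

```python
import math


def forecast_by_analogy(history, recent, horizon, k=2):
    """Predict the next `horizon` samples after `recent` by averaging what
    followed the k historical windows that best match `recent`."""
    m = len(recent)
    candidates = []
    for start in range(len(history) - m - horizon + 1):
        window = history[start:start + m]
        candidates.append((math.dist(window, recent), start))
    candidates.sort()
    futures = [history[s + m : s + m + horizon] for _, s in candidates[:k]]
    # Average the k continuations step by step.
    return [sum(step) / len(futures) for step in zip(*futures)]


history = [1, 2, 3, 10, 1, 2, 3, 12, 5, 5, 5]
# [1, 2, 3] was followed once by 10 and once by 12.
print(forecast_by_analogy(history, [1, 2, 3], horizon=1, k=2))  # [11.0]
```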

This lab analysis adds cost and delay. Using predictive analytics software, a process expert can create and deploy a predictor for impurity concentration that uses sensor data as an early indication. As a result, truck loading can start earlier, lead time is shorter, and the probability of rejection due to off-specification product is reduced. On-the-fly detection of early indicators can also spot process variations.
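
A very simple predictor of this kind is a least-squares fit from one online sensor reading to past lab results, used to decide whether loading can start before the lab confirms quality. The single-variable linear model, the spec limit, and the function names are all assumptions for illustration; a real predictor would likely use more inputs and a safety margin.

```python
def fit_predictor(sensor, lab):
    """Fit impurity ~ a * sensor + b by ordinary least squares on past batches."""
    n = len(sensor)
    mx, my = sum(sensor) / n, sum(lab) / n
    a = sum((x - mx) * (y - my) for x, y in zip(sensor, lab)) / sum((x - mx) ** 2 for x in sensor)
    b = my - a * mx
    return lambda x: a * x + b


def release_decision(predict, reading, spec_limit):
    """Start loading early only if the predicted impurity is within spec."""
    return "load" if predict(reading) <= spec_limit else "wait for lab"


predict = fit_predictor(sensor=[1, 2, 3, 4], lab=[3, 5, 7, 9])  # impurity = 2 * sensor + 1
print(release_decision(predict, reading=2, spec_limit=6))  # load
print(release_decision(predict, reading=4, spec_limit=6))  # wait for lab
```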

Analytics and decision making

It’s about optimizing operations through proactive decision making, not just examining past process behavior. The first requirement is a way to capture information from multiple users, including process engineers, operators, maintenance staff, and others, in a single environment connected to the relevant process data that furnishes context.

The next step is creating a golden profile: using search features to find the best transitions or batches of a given type among many historical ones. The golden profile is used for predictive monitoring. Users overlay a live view of a process on the golden profile to verify that recent changes produce the expected results. This lets users proactively adjust settings to reach optimal performance, or test changes for the right results before implementing them.
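
A golden profile can be sketched as a band around the average of the best historical batches, against which a live batch is checked sample by sample. This assumes batches are already time-aligned and equally long and that each has a quality score; the band of mean plus or minus two standard deviations and the function names are hypothetical choices.

```python
import statistics


def golden_profile(batches, scores, n_best=3, width=2.0):
    """Per-sample (lower, mean, upper) bands built from the n_best highest-scoring batches."""
    ranked = sorted(zip(scores, batches), key=lambda p: p[0], reverse=True)
    best = [b for _, b in ranked[:n_best]]
    profile = []
    for samples in zip(*best):
        mean = statistics.fmean(samples)
        sd = statistics.pstdev(samples)
        profile.append((mean - width * sd, mean, mean + width * sd))
    return profile


def deviations(profile, live):
    """Indices where the live batch (so far) leaves the golden band."""
    return [i for i, (x, (lo, _, hi)) in enumerate(zip(live, profile)) if not lo <= x <= hi]


batches = [[10, 20, 30], [12, 22, 32], [11, 21, 31], [0, 0, 0]]
scores = [0.9, 0.8, 0.95, 0.1]  # the last batch is poor and is left out
profile = golden_profile(batches, scores)
print(deviations(profile, live=[11, 25]))  # [1]: the second sample is above the band
```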

The application also provides sophisticated alarm capabilities. Rather than simply sending alerts, it supports configuring meaningful alarms that help prevent problems from happening. A process engineer can review the operator annotations related to a problem by searching for comparable past anomalies. Based on an overlay of those episodes, the engineer can set alarm limits to prevent similar occurrences in the future.
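
One hypothetical way to turn such an overlay into a high alarm limit is to place it between the highest value seen in normal operation and the lowest peak reached in the lead-up to past anomalies, and to report when the two ranges overlap. The midpoint rule and the function name are assumptions, not the application’s logic.

```python
def suggest_alarm_limit(normal_values, pre_anomaly_windows):
    """Suggest a high alarm limit from an overlay of past episodes.

    normal_values: samples from periods with no reported problem.
    pre_anomaly_windows: one list of samples per past anomaly, covering the
    lead-up to the event (for example, episodes found by a similarity search).
    Returns None if normal and pre-anomaly ranges overlap.
    """
    normal_max = max(normal_values)
    anomaly_peak_min = min(max(w) for w in pre_anomaly_windows)
    if anomaly_peak_min <= normal_max:
        return None  # no clean separation; a single high limit would misfire
    return (normal_max + anomaly_peak_min) / 2


print(suggest_alarm_limit([70, 72, 75, 74], [[76, 80, 85], [78, 83, 90]]))  # 80.0
```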

Advanced search capabilities, supported by predictive analytics, can simplify proactive decision making.

Bert Baeck is the CEO at TrendMiner.