A robot has learned to visually predict how its partner robot will behave, displaying a glimmer of empathy, which could help robots get along with other robots and humans more intuitively.

A Columbia University Engineering robot has learned to predict its partner robot’s future actions and goals based on just a few initial video frames. Like other primates, when we humans are cooped up together for a long time, we quickly learn to predict the near-term actions of our roommates, co-workers or family members. Our ability to anticipate the actions of others makes it easier for us to live and work together. In contrast, even the most intelligent and advanced robots have remained notoriously inept at this sort of social communication. This may be about to change.

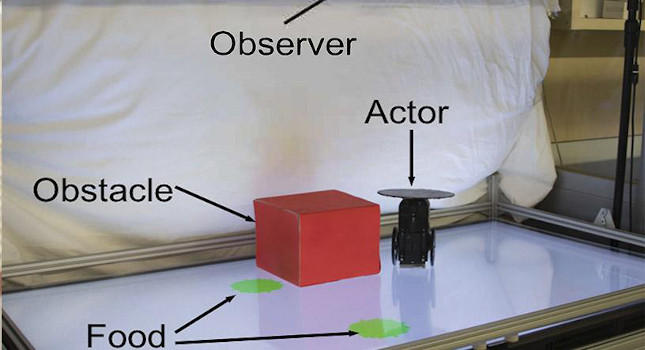

After observing its partner puttering around for two hours, the observing robot began to anticipate its partner’s goal and path. It was eventually able to predict both 98 out of 100 times across varying situations, without being told explicitly about the partner’s visibility handicap.

“Our initial results are very exciting,” said Boyuan Chen, lead author of the study, which was conducted in collaboration with Carl Vondrick, assistant professor of computer science, and published in Nature Scientific Reports. “Our findings begin to demonstrate how robots can see the world from another robot’s perspective. The ability of the observer to put itself in its partner’s shoes, so to speak, and understand, without being guided, whether its partner could or could not see the green circle from its vantage point, is perhaps a primitive form of empathy.”

Predictions from the observer machine: the observer sees the left-side video and predicts the behavior of the actor robot shown on the right. With more information, the observer can correct its predictions about the actor’s final behavior.

Humans are still better than robots at describing their predictions in words. The researchers had the observing robot make its predictions in the form of images, rather than words, to avoid becoming entangled in the thorny challenges of human language. Yet, Lipson speculates, the ability of a robot to predict future actions visually is not unique: “We humans also think visually sometimes. We frequently imagine the future in our mind’s eyes, not in words.”

Lipson acknowledged there are many ethical questions. The technology will make robots more resilient and useful, but when robots can anticipate how humans think, they may also learn to manipulate those thoughts.

“We recognize that robots aren’t going to remain passive instruction-following machines for long,” Lipson said. “Like other forms of advanced AI, we hope that policymakers can help keep this kind of technology in check, so that we can all benefit.”

– Edited by Chris Vavra, web content manager, Control Engineering, CFE Media and Technology.