Emerson’s Steve Hassell discusses how to protect your system and better utilize your data.

A recent technology report issued by Emerson Network Power, a division of Emerson, discussed four emerging models for managing data centers around issues such as computing capacity, sustainability, and cybersecurity. According to Emerson, those four models are:

The Data Fortress: Taking a security-first approach to data center design, deploying off-network data center "pods" for sensitive information.

The Cloud of Many Drops: The Emerson report notes, "despite virtualization-driven improvements, too many servers remain underutilized. Some studies indicate servers use just 5% to 15% of their computing capacity … We see a future where organizations explore shared-service models, selling some of that excess capacity and in effect becoming part of the cloud."

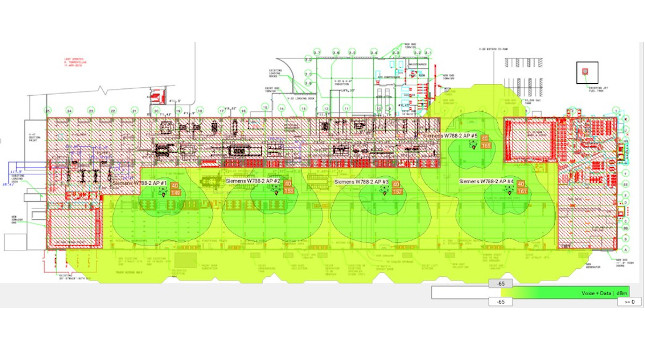

Fog Computing: "Introduced by Cisco, fog computing connects multiple small networks into a single large network, with application services distributed across smart devices and edge-computing systems to improve efficiency and concentrate data processing closer to devices and networks," the report states. "It’s a logical response to the massive amount of data being generated by the Internet of Things (IoT)."

The Corporate Social-Responsibility-Compliant Data Center: Efforts to reduce carbon footprints "are pushing the focus toward sustainability and corporate responsibility. The industry is responding with increased use of alternative energy in an effort to move toward carbon neutrality."

Steve Hassell, president of data center solutions for Emerson Network Power, discussed with CFE Media the report and its implications for manufacturing at a time when cloud computing and cybersecurity are two of the most pressing topics as manufacturers move into the era of the Industrial Internet of Things (IIoT):

CFE Media: What are the security challenges of bring-your-own-device (BYOD) users that are different from deliberate attacks from hackers? How should manufacturers address this BYOD security issue?

Hassell: When you look at BYOD and users, there are two issues that differ from what you see with an internal hacker: the ability to directly load information, and viruses or malware on the device.

The first issue revolves around both the intent of the BYOD user and the ability of their device to remove information from the enterprise. Virtually every device has some ability to make a copy of information from the enterprise either purposefully or in the background. The copies of this information create a number of issues, especially from an IP-protection and legal-compliance standpoint. The most effective way to prevent this type of information leakage is by using some form of virtual client on the BYOD device to basically eliminate the accidental transfer of data and make purposeful removal more difficult.

The second issue involves the spread of a virus or malware when an infected BYOD is connected to the network. Again, the use of a virtual client can help dramatically because you can still allow complete access to corporate information without allowing direct connectivity to the protected network.

CFE Media: Underutilized servers are one issue. Underutilized data is another. How do you view the data-management issue in manufacturing, and how can cloud computing help?

Hassell: There are two main sources of underutilized data: access to the data and the ability to find the data. The cloud can certainly help the first issue by providing more ubiquitous access to data no matter where a user is located.

The second problem is much harder. While a lot of articles have been written about the ability to use large, unstructured "lakes" of information (e.g., "Big Data"), the vast majority of users will still need some way to be able to search for the data they need and will access it through what is called a "direct query." Techniques like master data management (MDM) overlay a certain amount of structure across an organization’s data so that it can be found through conventional tools.

The problem with MDM always has been (achieving) the discipline required from all users combined with the overhead (personnel and systems) to accomplish MDM. There are a number of new tools that help with this function, but it’s still a big problem.

CFE Media: What are some circumstances that might lead a company to retain an on-site data center as opposed to using the cloud?

Hassell: There are a number of reasons why a company may choose to retain some or all of its data on-site. Security and regulatory concerns are two reasons that are most often cited, but application types or architectures and personal preference also play into the mix.

It’s also important to recognize that this really isn’t an "either-or" discussion. Hybrid approaches including owned, co-location, and cloud are increasingly popular and will probably dominate for at least the near term.

CFE Media: What’s your assessment of current data capacity? Are we keeping pace with the growth of data?

Hassell: From what I have read, there are about 8 zettabytes (or 8 trillion gigabytes) of data today, and that number is expected to grow to about 35 zettabytes by 2020. I think that technology is doing a very good job of dealing with the raw storage capacity of data, but having tools that allow someone to search, correlate, and access all of that data will be a critical element to turning all that data into useful information.
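
As a quick check on the figures Hassell cites, the conversion can be sketched in a few lines of Python. This is a minimal illustration that assumes decimal (SI) units, where 1 zettabyte is 10^21 bytes and 1 gigabyte is 10^9 bytes; the function and variable names are hypothetical and not taken from the Emerson report.

```python
# Assumption: decimal (SI) units, not binary (ZiB/GiB).
BYTES_PER_ZB = 10**21
BYTES_PER_GB = 10**9


def zettabytes_to_gigabytes(zb: int) -> int:
    """Convert a whole number of zettabytes to gigabytes (SI units)."""
    return zb * BYTES_PER_ZB // BYTES_PER_GB


# Figures quoted in the interview: about 8 ZB today, about 35 ZB by 2020.
current_gb = zettabytes_to_gigabytes(8)
projected_gb = zettabytes_to_gigabytes(35)
growth_factor = 35 / 8

print(f"Current:   {current_gb:,} GB")
print(f"Projected: {projected_gb:,} GB")
print(f"Growth:    {growth_factor:.3f}x")
```

Under these assumptions, 8 zettabytes works out to 8 trillion gigabytes, matching the figure quoted above, and the projected 35 zettabytes represents growth of roughly 4.4 times.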