Virtualization efforts pave the way for predictive analytics

Virtualization insights

- The global manufacturer shifted from manual data collection methods, which were labor-intensive and posed cybersecurity risks, to an AI-driven approach for better process quality and reliability.

- By reducing manual troubleshooting tasks, the manufacturer’s personnel could focus more on value-added activities, enhancing both production efficiency and product quality.

- Hargrove Controls & Automation advocated starting with small-scale projects to demonstrate concept viability and ROI before scaling up to larger implementations, which helps justify the budget and improves the odds of project success.

An ongoing multi-site program at a global manufacturer collects and analyzes production data using relatively common Industry 3.0 technologies and newer Industry 4.0 concepts, including cloud connectivity, AI-based analytics and predictive reliability.

As a result, a system integrator and engineering firm, together with the asset owner/operator, can make informed, data-driven control system and process improvements based on system feedback.

A need for better

To begin, the global manufacturer recognized the need to improve visibility of the processes running in its plants. In addition, its data collection methods were labor-intensive and posed potential cybersecurity risks. Moreover, process quality and reliability needed improvement.

Operators manually recorded process data from human-machine interfaces (HMIs) using paper and pencil. The documentation was routinely filed away until a problem arose. When an event did occur, the response was delayed while employees sifted through the paper documents to decipher the information.

Other local data collection methods also needed improvement. Engineers walked the plant floor with a USB stick to move data from shop floor computers to their office laptops. They retrieved data only after a problem had been identified. The practice also posed a significant cybersecurity risk.

In addition to data-visibility challenges, the manufacturer recognized it faced certain labor constraints. It understood that reducing the time employees spent putting out fires, or troubleshooting to get production running, would free them to focus on value-added tasks.

Keeping equipment running has an immediate impact on the bottom line that management can see. However, a well-run plant also allows personnel to spend more time on continuous improvement and on making top-quality products.

The manufacturer wanted to identify the reasons for its quality issues and for the reliability problems it faced. It was aware of the increasing efficacy of Industry 4.0 initiatives and witnessed other companies in different sectors advancing with data-analysis efforts. The manufacturer asked Hargrove Controls & Automation to develop a roadmap and execution plan to improve its data retrieval and analytics strategy.

Hargrove Controls & Automation planned and executed the job employing experienced personnel spanning a range of engineering and technical disciplines.

Data-driven decision making

To relieve the manufacturer’s IT management burden, Hargrove used both VMware and Microsoft Hyper-V virtualization technologies to reduce the company’s hardware footprint.

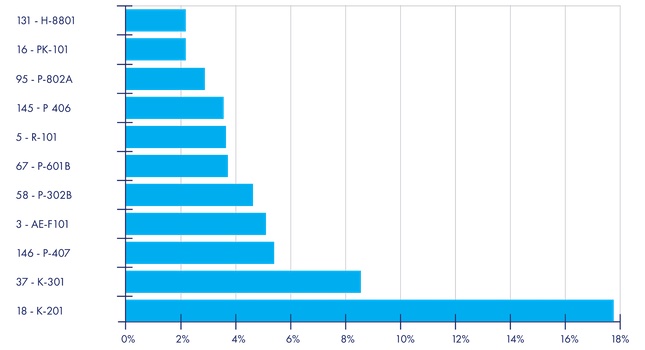

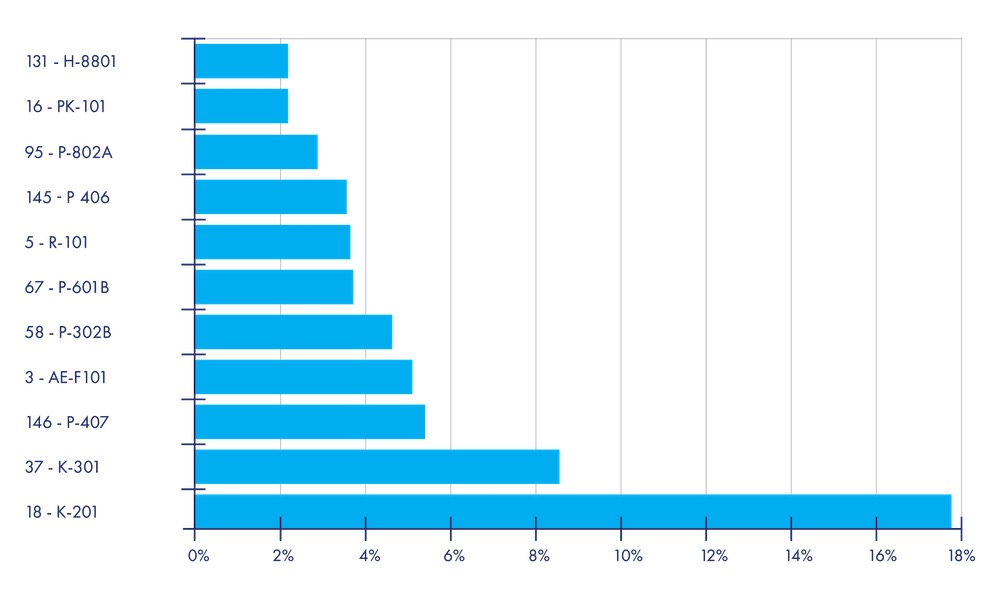

In addition, the company selected a cloud-based industrial artificial intelligence (AI) platform to generate insights into running processes. As a result, AI analyzed the process data to provide real-time insights into how the process and associated equipment were running. Beyond the cloud data visualization tools, Hargrove Controls & Automation’s upgrades allowed company engineers to review data and trends locally and discern correlations on their own. At the same time, the AI engine sifted through variables to find nuanced interactions among parameters that might be difficult for onsite engineers to recognize.
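As an illustration of the kind of local review described above, the following minimal sketch computes a Pearson correlation between two process variables. The variable names and readings are invented for the example and do not come from the manufacturer’s data; the article does not say which tools or statistics the engineers actually used.

```python
import math


def pearson(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length and at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations, and sums of squared deviations
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical hourly readings, made up for illustration only
coolant_temp_c = [30.0, 32.0, 34.0, 36.0, 38.0]
off_spec_pct = [1.0, 1.2, 1.5, 1.9, 2.4]

r = pearson(coolant_temp_c, off_spec_pct)
print(f"correlation between coolant temperature and off-spec rate: {r:.2f}")
```

A coefficient near 1 or -1 only flags a relationship worth investigating; it does not establish a cause, which is one reason an engineer’s process knowledge remains part of the review.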

With AI supporting data analysis from the newly connected machines, the company had better tools for predicting downtime and understanding which machines needed maintenance support. Data can point to mechanical causes such as the need for a bigger heat exchanger, an incorrectly calibrated instrument or a motor not running fast enough. Once known issues are mitigated, the data confirms whether the changes addressed the problem.

When gaps and challenges are addressed, efficiency improvements are realized. With more reliable equipment, a facility sees improved production throughput because machine uptime increases and variability decreases. Quality control improves, which saves money by reducing off-spec product and avoiding the material and labor wasted on product that ends up scrapped or reworked.

Digitalization journey

The manufacturer achieved production efficiency gains through improved quality control. Reducing manual tasks improved worker efficiency. The company is still in the process of digitalizing its facilities. To date, about a half-dozen facilities have received some type of improvement with the help of Hargrove’s team, with plans to continue the partnership.

The manufacturer started as a company driven by the skill and experience of its employees. It is transforming into a data-driven company. It knows that the labor force is changing. Newer employees may not share the same ideas about tenure as previous generations. The manufacturer will always count on the people it employs, but those people are now supported by optimized processes and equipped with data for informed decision making.

The end goal of digitalization and Industry 4.0 is a single portal for process, business, maintenance and laboratory data, collated and analyzed by both people and machines on one platform.

Actionable information comes organized and, in many cases, filtered and summarized to give a company an immediate, clear picture, not only of its processes, but of the business as a whole. Useful, available technology lets companies implement these capabilities for real, tangible value. The factory of the future won’t appear overnight, but with the right partners and resources, having a roadmap to get there is increasingly imperative.

Justify the budget based on results analytics

Documenting return on investment (ROI) for a software project can be a challenge. The gains come from in-process improvements that may not be apparent to those looking only at the bottom line, which is subject to change for any number of reasons. How can managers prove that savings will more than pay for the project?

Although the ROI case for a software project may not be straightforward, real dollars are involved. You can calculate, for example, the cost of a shutdown or of making a thousand kilograms of off-spec material per year. The key is to set realistic assumptions for process improvements, then compare results over a defined period against past performance.
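The arithmetic behind such an estimate can be sketched in a few lines. The example below is a simplified illustration, not Hargrove’s method: every figure is a hypothetical placeholder, and the model counts only two savings sources (avoided downtime and avoided off-spec material) against a one-time project cost.

```python
from dataclasses import dataclass


@dataclass
class RoiAssumptions:
    """Inputs for a simple ROI estimate. All values are assumptions to be
    replaced with a plant's own historical figures."""
    project_cost: float             # one-time cost of the pilot project
    downtime_hours_baseline: float  # unplanned downtime per year, past performance
    downtime_cost_per_hour: float   # lost margin per hour of downtime
    off_spec_kg_baseline: float     # off-spec material per year, past performance
    off_spec_cost_per_kg: float     # material and rework cost per kilogram
    downtime_reduction: float       # assumed fractional improvement, 0.0 to 1.0
    off_spec_reduction: float       # assumed fractional improvement, 0.0 to 1.0


def annual_savings(a):
    """Estimated yearly savings from avoided downtime and off-spec product."""
    downtime = a.downtime_hours_baseline * a.downtime_cost_per_hour * a.downtime_reduction
    off_spec = a.off_spec_kg_baseline * a.off_spec_cost_per_kg * a.off_spec_reduction
    return downtime + off_spec


def simple_roi(a, years=1.0):
    """Net gain over the period divided by project cost (no discounting)."""
    return (annual_savings(a) * years - a.project_cost) / a.project_cost


def payback_years(a):
    """Years of savings needed to recover the project cost."""
    savings = annual_savings(a)
    if savings <= 0:
        return float("inf")
    return a.project_cost / savings


# Hypothetical pilot: every number here is invented for illustration
pilot = RoiAssumptions(
    project_cost=50_000,
    downtime_hours_baseline=40,
    downtime_cost_per_hour=5_000,
    off_spec_kg_baseline=1_000,
    off_spec_cost_per_kg=20,
    downtime_reduction=0.25,
    off_spec_reduction=0.5,
)

print(f"annual savings: {annual_savings(pilot):,.0f}")
print(f"first-year ROI: {simple_roi(pilot):.0%}")
print(f"payback period: {payback_years(pilot):.2f} years")
```

Keeping the improvement percentages as explicit inputs makes the assumptions visible to stakeholders, and the same calculation can be rerun with measured results after a pilot to compare against the original estimate.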

Hargrove Controls & Automation recommends starting with a small-scale project to prove the concept, then scaling up to a larger rollout once ROI has been demonstrated to all stakeholders.

Self-funding concepts can help companies justify and budget for transformational initiatives based on results from preliminary project rollouts.

Fast fact

Virtualization is a computing technology that creates virtual representations of physical machines. Virtualization uses software to simulate hardware functionality. This allows multiple virtual machines (VMs) to run simultaneously on a single physical machine.