With a GPU-accelerated database, organizations evaluate larger data sets.

Oil & gas companies rely on a variety of data sources to inform their business decisions. In today’s industrial environments, the data collected increasingly includes real-time data sets, such as high-velocity streaming drilling information.

When it comes to basin modeling, geophysicists, petrophysicists and geologists all contribute to the collection of a vast data set. Because they need confidence that the locations chosen for drilling will yield substantial energy resources, many organizations want to accurately model subsurface reservoirs before beginning any well drilling or development.

From a data perspective, the more models generated and the more granular they are, the better and more profitable the company’s decisions. However, generating granular models requires a massive amount of data, which is currently a major challenge for these organizations because they are ill-equipped to process and analyze data at scale.

GPU-enabled acceleration

Oil & gas companies operate like any other enterprise: they tend to store all the data generated across the organization, from well sensors to drilling information, in traditional data lakes or enterprise data warehouses built on systems like Hadoop (HDFS) or Cassandra, or across distributed database systems. While some companies are migrating to cloud warehouses, like Snowflake, or to cloud object storage, such as Azure Blob Storage or Google Cloud Storage, the challenges remain the same: these systems were developed primarily for storing data. As a result, they are poorly suited to processing the data collected at scale, creating bottlenecks and inefficiencies for organizations. This slows down the well-vetting process and, ultimately, resource extraction.

When leveraging a GPU-accelerated database for basin modeling, companies get an MRI-like image of the earth’s subsurface, visualizing 100 billion data points instantaneously, for a higher level of granularity and confidence. Courtesy: Kinetica

Let’s say you are examining a basin model, filtering for the properties that matter most when deciding well placements, and something interesting is identified on the basin’s west side. Being able to filter for the sought-after properties gives engineers information about the best locations to drill with minimal effort, so natural resources can be extracted in a more responsible, safe and efficient manner.
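As a rough illustration of this kind of property filtering, the sketch below screens model cells in plain Python. The `Cell` fields, the thresholds and the "west side" cutoff are all hypothetical, chosen only for illustration; a GPU-accelerated database would evaluate an equivalent filter over billions of cells rather than a short list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    """One cell of a basin model (all fields are illustrative assumptions)."""
    x_km: float             # distance east of the basin's western edge
    depth_m: float          # depth below surface
    porosity: float         # pore fraction, 0..1
    permeability_md: float  # permeability in millidarcies


def filter_cells(cells, min_porosity, min_permeability_md, max_x_km=None):
    """Keep cells that meet both thresholds; optionally keep only cells
    located at or west of max_x_km."""
    kept = []
    for cell in cells:
        if cell.porosity < min_porosity:
            continue
        if cell.permeability_md < min_permeability_md:
            continue
        if max_x_km is not None and cell.x_km > max_x_km:
            continue
        kept.append(cell)
    return kept


cells = [
    Cell(x_km=5.0, depth_m=2500.0, porosity=0.18, permeability_md=40.0),
    Cell(x_km=8.0, depth_m=2600.0, porosity=0.05, permeability_md=60.0),
    Cell(x_km=90.0, depth_m=2400.0, porosity=0.20, permeability_md=55.0),
]

# Candidate cells within the (hypothetical) western 20 km of the basin.
west_candidates = filter_cells(cells, 0.10, 30.0, max_x_km=20.0)
print(len(west_candidates))
```

Dropping the `max_x_km` argument runs the same screen across the whole basin, which is how an analyst might confirm that the west-side result is not an artifact of the cutoff.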

Steps to a data analytics solution

For oil & gas companies to take full advantage of a data analytics solution, three steps are mandatory. First, because these organizations typically get information from a wide variety of sources, they must standardize their data sets.

Data analysts receive models in anywhere between five and fifteen different formats, which can vary greatly in terms of quality, density and source. To gain the best insights, organizations must create a truly authoritative and standardized system of record for whatever data they collect and store, whether it be basin models, well data, or any other type of data.

Second, organizations must create a standard around the different properties known to be relevant for a particular basin. Having a standardized catalog across all data sets is critical. Take the Delaware Basin or the Permian Basin in Texas – what are the specific characteristics of the reservoir that can provide concrete clues as to where to place a well in order to do exploration?

Third, after standardizing and setting properties, analysts must think about how to represent and use the data. Users must be able to effectively interact with the data to derive decisions that help the business. Questions to think about include what the front end is going to look like and what tools analysts will use to deliver the best, readily accessible information to the petrophysicists and geophysicists, so that they can analyze and extract information with relative ease and arrive at results that maximize business impact.
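The first two steps above, standardizing incoming data and agreeing on a shared property catalog, can be sketched as a simple field mapping. This is a minimal sketch under stated assumptions: the source field names, canonical names and unit conversions in `FIELD_MAP` are hypothetical, not taken from any real vendor format.

```python
# Hypothetical catalog: source field name -> (canonical name, scale factor).
FIELD_MAP = {
    "phi": ("porosity", 1.0),            # already a fraction
    "porosity": ("porosity", 1.0),
    "porosity_pct": ("porosity", 0.01),  # percent -> fraction
    "depth_m": ("depth_m", 1.0),
    "depth_ft": ("depth_m", 0.3048),     # feet -> meters
}


def standardize_record(raw):
    """Map one source record onto canonical names and units.

    Returns (clean, unknown): the standardized values, plus the list of
    source fields the catalog does not recognize so they can be reviewed
    rather than silently dropped.
    """
    clean = {}
    unknown = []
    for key, value in raw.items():
        mapping = FIELD_MAP.get(key.strip().lower())
        if mapping is None:
            unknown.append(key)
            continue
        name, scale = mapping
        clean[name] = float(value) * scale
    return clean, unknown


clean, unknown = standardize_record(
    {"PHI": 0.18, "Depth_ft": 1000, "operator_note": "n/a"}
)
print(clean, unknown)
```

Returning the unrecognized fields separately is a deliberate choice in this sketch: it makes gaps in the catalog visible, which matters when models arrive in many formats of varying quality.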

Infrastructure required

To deploy an effective data analytics and data-driven solution, oil & gas organizations need a GPU-powered platform. Organizations leveraging GPUs take advantage of the computing power and low-latency performance to analyze vast swaths of data.

Another aspect of a good solution is efficient, lightweight and clean user-interface and data-exploration tools. These give end users (in the modeling example, geophysicists and petrophysicists) the power to make good decisions about where organizations are going to spend millions or even billions of dollars to build out assets, purchase land, build wells, and establish the infrastructure and human resources needed for hydrocarbon extraction.

Establishing an effective data streaming pipeline is also key, whether it carries static data pulled from multiple sources, real-time drilling data or streaming data coming directly from the field via connected Internet of Things (IoT) devices.

Anadarko case study

Anadarko, one of the world’s largest oil and natural gas exploration and production companies, leveraged a GPU-based analytics platform that combines a GPU-accelerated database, streaming analytics, location intelligence, machine learning-powered analytics, smart applications, and cloud-ready architecture.

To get started, Anadarko partnered with Kinetica to customize the platform to its needs. Kinetica then used Google Cloud Platform (GCP) as virtual infrastructure to stand up a fifteen-node, GPU-backed Kinetica environment, with Google Cloud Storage serving as the static persistence layer for Anadarko’s data sets, whether models, wells, or any other data types the company may use.

In this case, Google Cloud Storage is acting as the authoritative data source. From the Kinetica side, the team is leveraging its 15-node connected cluster to rapidly ingest data from Google Cloud Storage into the platform, especially as data changes become available. Use of the platform and GPUs accelerates the output of Anadarko’s data scientists and geophysicists, as they run GPU-accelerated models that make faster spatial and economic predictions. This allows the geologists and petrophysicists to do their analysis on the incredibly dense datasets in the most effective manner, identifying where and how to extract the resources from the reservoirs in the most cost-efficient, time-efficient, safe and responsible manner.

With the analytics platform, Anadarko did what had seemed impossible: it rendered a high-fidelity, 3-D view of the Delaware oil basin using 90 billion data points. To put this into perspective, Anadarko moved from a low-fidelity (approximately nine billion data points) view of the basin, with the visualization equivalent to the size of Harvard University (approx. 20 acres), to a high-fidelity view (approximately 90 billion data points) that is equivalent to the size of the state of Massachusetts and three times the depth of the Empire State Building. End users get the most granular view of the basin, to make the best possible decisions.

The end users, geologists and petrophysicists, are responsible for delivering recommendations that the business uses to decide whether, when and where to purchase land to set up operations, build wells and so forth. For a company like Anadarko, being the first company to act on where to purchase or lease land is significant. There is typically a 500x to 700x cost difference between being the first company to purchase or lease land, especially when hundreds of thousands of acres are involved at a time, and being the second. That is, once it’s known that an organization is buying up land, all the other oil & gas companies jump on it as well.

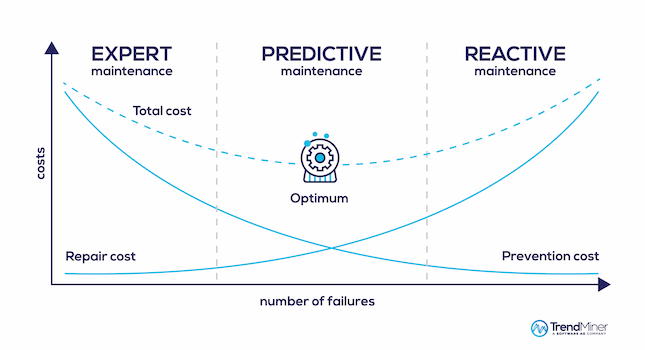

Innovation in IoT and edge

The Internet of Things (IoT) and edge computing have accelerated innovations in the oil & gas industry, especially for companies that are data driven. With IoT and edge computing, companies establish real-time well monitoring, uncover drilling information, or vet expensive equipment. For example, IoT-enabled sensors can capture information on equipment conditions, which is then fed into a data storage and analytics platform and analyzed at the edge, on the equipment itself, instead of being sent to a centralized data warehouse. This allows data analysts as well as end users to receive the data in real time, enabling companies to predict equipment failures before they happen and minimize downtime. With these predictions, the companies can identify when preventative maintenance is needed, ahead of the issue occurring, avoiding very costly repairs and maintenance expenditures.
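To make the edge-analysis idea concrete, here is a minimal sketch of a monitor that could run next to a sensor and flag a maintenance warning when the rolling average of recent readings drifts above a limit. The `EdgeMonitor` class, the window size and the limit are hypothetical; real predictive-maintenance models are typically far richer than a single threshold.

```python
from collections import deque


class EdgeMonitor:
    """Rolling-average check intended to run on or near the equipment."""

    def __init__(self, window, limit):
        # deque with maxlen discards the oldest reading automatically.
        self.readings = deque(maxlen=window)
        self.limit = limit

    def add(self, value):
        """Record one reading; return True if maintenance should be flagged.

        No alert is raised until the window is full, so a single early
        spike does not trigger a warning on its own.
        """
        self.readings.append(value)
        if len(self.readings) < self.readings.maxlen:
            return False
        mean = sum(self.readings) / len(self.readings)
        return mean > self.limit


# Illustrative vibration readings (arbitrary units) trending upward.
monitor = EdgeMonitor(window=3, limit=5.0)
alerts = [monitor.add(v) for v in [4.0, 4.0, 4.0, 6.0, 8.0, 9.0]]
print(alerts)
```

Because only the alert, not every raw reading, needs to leave the device, a check like this is one way the central platform can be spared the full sensor stream.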

IoT and edge computing also enable real-time streaming of drilling information, where companies can be informed almost immediately when they’ve broken into a different type of soil, based on the geologic time-series data about how far they have drilled. Being able to identify that information and report on it is significant in terms of understanding how far the drilling team has gotten in the extraction operation.
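One simple way to spot such a transition in streamed drilling data is to watch for a sharp shift in drilling rate. The sketch below assumes bit depth is sampled at fixed time intervals and compares the average rate in adjacent windows; the function name, window size and threshold are hypothetical, and a change in rate is only a proxy for a change in formation.

```python
def detect_rate_shift(depths_m, window=3, rel_change=0.5):
    """Return the index of the first interval where the drilling rate
    shifts sharply, or None if no shift is found.

    depths_m: bit depth readings taken at fixed time intervals.
    Interval i spans readings i and i + 1.
    """
    # Depth gained per sampling interval.
    rates = [b - a for a, b in zip(depths_m, depths_m[1:])]
    for i in range(window, len(rates) - window + 1):
        before = sum(rates[i - window:i]) / window
        after = sum(rates[i:i + window]) / window
        if before > 0 and abs(after - before) / before >= rel_change:
            return i
    return None


# Illustrative readings: 2 m per interval, then 1 m per interval.
depths = [0, 2, 4, 6, 8, 9, 10, 11, 12]
shift_at = detect_rate_shift(depths)
print(shift_at)
```

In this example the rate halves starting with the interval between the fifth and sixth readings (8 m to 9 m), which is the interval the function reports.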

Technologies like active analytics, IoT, and edge computing have allowed oil & gas companies to effectively make better decisions for their businesses, from figuring out where to build wells to monitoring and predicting expensive equipment failure. In order to stay competitive, these companies must realize, and ultimately leverage, the most important asset they have: their data.